One of the challenges facing disaster risk reduction is the gap between research and practice. Despite the considerable investment in publicly funded and commissioned disaster risk reduction research, the application of research findings to operational practice often lags, if findings are implemented at all. This paper addresses the need to understand the antecedents of implementation and identifies activities involved in the research utilisation process. It reports on findings that led to the development of a research utilisation maturity matrix encompassing four levels of maturity: basic, developing, established and leading. The study involved collaboration and discussion with emergency services practitioners, through which a conceptual model of the elements needed to support implementation of research was identified. This model suggests that four elements play key roles in effective implementation. The study gathered information from emergency services practitioners and their stakeholders about the meaning of the research findings and what, if anything, needed to change. The study’s findings can help emergency services personnel assess organisational practices to improve research utilisation within the emergency sector and contribute to greater disaster risk reduction outcomes.

Introduction

Disaster risk reduction is global in scale and includes many communities and societies. Those communities comprise formal and informal groups and organisations, of which emergency services organisations form one part. Nevertheless, their role in supporting disaster risk reduction is important. It is also important to acknowledge that commissioned inquiry using research is one source of information on how best to sustain or improve practice. In the emergency services sector, there has been a sustained and significant investment in research, as evidenced by the 18 years of Australian Government funding of the Bushfire CRC and the Bushfire and Natural Hazards CRC to improve knowledge and improve practice.

Although using research to inform practice sounds straightforward, as Kay and co-authors (2019) point out, negotiating this in the real world is not as simple as it might seem. This is because researchers produce findings in published papers and these are not easily or directly usable by practitioners. Moreover, decision-makers face barriers to integrating research into practice. In some circumstances, research is disconnected from practitioner experience and lacks credibility. In other cases, research findings are contested on ideological grounds because they do not align with the beliefs of a particular group or organisation. Sometimes research findings are simply too costly to implement relative to the proposed benefits.

The need to demonstrate value and effect from research has never been greater. Over the past decade there has been increased scrutiny on emergency management organisations to justify actions (see Eburn & Dovers 2015, Boin & ’t Hart 2010). There is an urgent need for these organisations to ‘learn about learning’ (Adams, Colebatch & Walker 2015) to innovate. One way to do this is to use research outcomes from their partnerships with researchers and their institutions. This paper reports on what emergency services practitioners can do to use commissioned research to inform and improve the way they do business.

Closing the research-practice gap

Part of the problem is the assumption that research is transferred to practice through passive information-giving (Rogers 2003, Cornes et al. 2019). Labels like ‘research adoption’ and ‘research transfer’ reinforce this view. The approach, which assumes a linear flow of information, is wanting (Baumbusch et al. 2008, Cornes et al. 2019, Kay et al. 2019, Radin Umar et al. 2018). Utilisation does not flow automatically from research outputs. There is no ‘truth’ out there. For research to be relevant it needs to connect to real-world problems and add value to practitioner and end-user experiences. Good links between research and practice enable:

- co-creation of new knowledge (Brown et al. 2019)

- increased support of resilience (Doyle et al. 2015)

- better understandings of resilience and enhanced capability (Brown et al. 2019, Vahanvati 2020)

- improved emergency response and management capability (Brooks et al. 2019)

- improved ways to review and evaluate programs (Spiekermann et al. 2015; Taylor, Ryan & Johnston 2020).

Utilising research in emergency services organisations is a social process; one that is supported or resisted by collective beliefs that are held by communities, organisations and societies. Utilising research requires understanding the conclusions and their context, assessing and evaluating their meaning and implications, and judging whether a change in practice is worthwhile or desirable. Any change must be connected to organisational business and strategy.

Standing and colleagues (2016) claim that adopting new practices may be enacted by individuals and teams but must be supported by organisational processes. This includes having resources and organisational structures (e.g. governance, policies) that allow changes based on research to be implemented. Standing and colleagues (2016) also suggest that a new research agenda needs to focus on the antecedents of implementation and the different stages involved in the research utilisation process.

This paper addresses the research question: What are the organisational conditions that facilitate successful implementation of research findings commissioned by emergency services organisations and what are the implications for research commissioned to support disaster risk reduction?

Survey

The survey used for this study is part of a longitudinal study that has been conducted every two years since 2010 by the University of Tasmania on behalf of the Bushfire and Natural Hazards Cooperative Research Centre (BNHCRC) and the Australasian Fire and Emergency Services Authorities Council (AFAC). The survey is used to consult with the emergency services sector on research utilisation. Results inform future directions in policy for AFAC and the BNHCRC. The survey includes qualitative free-text questions and quantitative items.

Method

This study involved developing a research utilisation maturity matrix based on the most recent survey responses. This was followed by consultative work conducted over a 12-month period. This work led to a trial of a self-assessment diagnostic tool used by emergency services practitioners to reflect on how they use research. Drawing on findings from existing research, a conceptual framework is proposed that describes the important processes in utilising research. Case studies were used to explain the model and the role the maturity matrix plays in understanding the different stages in research utilisation maturity. Ethics approval was provided by the University of Tasmania Social Sciences Research Ethics Committee; HHREC H0010741.

Survey questions sought answers on the perceived effectiveness of research adoption within emergency services organisations and assessed and evaluated the effects on agency practice. This included implementing changes, monitoring processes to track changes and communicating outcomes of changes made as a result of research. The survey also compiled participant perceptions of their agency as a ‘learning organisation’. A learning organisation is defined as one where personnel are able to learn from the experience of members of the organisation or emergency services community through processes of reflection, sense-making and action. This develops new ways of acting that can lead to an increased capacity to act differently in the environment through changes in practice (adapted from Kolb 2014). In addition, a number of survey questions were adapted from research investigating barriers to research utilisation (Funk 1999). The results of these aspects of the survey are reported elsewhere (Owen 2018; Owen, Bethune & Krusel 2018).

In both the 2016 and 2018 surveys, a free-text question sought information on whether participants were aware of how their agency kept up to date with research. If the participant answered ‘yes’ they were asked to provide details. Themes from responses to that question in the 2016 survey were discussed with practitioners involved with the AFAC Knowledge, Innovation and Research Utilisation Network (KIRUN). Based on those discussions, a set of descriptors was used to develop a research utilisation maturity matrix (see Table 1).

For the 2018 survey, and based on collaboration with KIRUN members, the descriptors used in 2016 were included and survey respondents were asked to rate their level of agreement with each statement as something they experienced within their agency (see Table 1). This paper explores those responses.

Table 1: Research utilisation maturity codes and survey response examples from surveys in 2016 and 2018.

| Level | Description | Examples in data |

| 1 = Basic (2016 n=46; 2018 n=29) | There are pockets of research utilisation; however, these are not systematically organised. Attempts to keep up to date with research depend on efforts by individuals. | Undefined, not clearly communicated within communications. Nil business unit assigned to research and development. …the onus for keeping up to date is largely upon individuals maintaining an interest or subscribing to emails. |

| 2 = Developing (2016 n=46; 2018 n=70) | Some systems and processes are documented that enable research to be disseminated. There is little or no evidence of analysis or effects assessment. | We have two people that email CRC updates to staff. Lots of material is distributed via our portal and email to keep staff and volunteers informed. |

| 3 = Established (2016 n=44; 2018 n=22) | There are systematic processes in place for reviewing research (e.g. dissemination and review either through job responsibilities or an internal research committee). | …developed a research committee. SMEs appointed as capability custodians to ensure up-to-date best practice. |

| 4 = Leading (2016 n=32; 2018 n=10) | There is evidence of using research proactively. Operational and strategic decisions are informed by research using formal research utilisation processes. The processes and systems are widely understood. | … a process of ensuring results are read by key specialist staff involved in program design and delivery, are interpreted and analysed for their implications and relevance and then used to inform decision-making and strategy through numerous internal fora. Alignment of evidence-based decision-making in the planning phases of annual planning and the development of indicators around causal factors that inform emergent risk. |
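Because the number of coded comments differs between the two survey years, the counts in Table 1 are easier to compare as shares of each year's total. The following is a minimal sketch of that arithmetic using only the counts reported in Table 1. The names `counts` and `level_shares` are illustrative and are not part of the study.

```python
# Counts of coded comments per maturity level, copied from Table 1.
counts = {
    2016: {"Basic": 46, "Developing": 46, "Established": 44, "Leading": 32},
    2018: {"Basic": 29, "Developing": 70, "Established": 22, "Leading": 10},
}


def level_shares(year_counts):
    """Return each level's share (in per cent) of the coded comments for one year."""
    total = sum(year_counts.values())
    return {level: 100 * n / total for level, n in year_counts.items()}


for year, year_counts in counts.items():
    shares = level_shares(year_counts)
    summary = ", ".join(f"{level} {share:.0f}%" for level, share in shares.items())
    print(f"{year} (n={sum(year_counts.values())}): {summary}")
```

These shares describe only the comments that were coded in each year. They should not be read as estimates for the sector as a whole.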

Procedure

Emergency services organisations across Australian states and territories were identified to take part in the study. An email was sent to heads of each organisation (e.g. commissioner, chief fire officer) inviting their participation and cooperation. The email invitation requested organisations to ensure a survey sample included staff in:

- senior management roles (e.g. communications, training and development, operational community safety, knowledge management, innovation and research)

- middle management roles (e.g. district managers)

- operational and frontline service positions (e.g. volunteers, field operations personnel, community education officers and training instructors).

The introductory email included a link to the survey on the Survey Monkey platform. The email explained that the purpose of the sampling method was to target personnel who:

- had an understanding of the strategic planning of the agency

- had some awareness and involvement in research activities

- had responsibility for implementing any changes based on research evidence.

Heads of agencies were requested to distribute the survey to 5–15 people in the survey target audiences depending on the size of the organisation. For example, 5 people for small-sized organisations (<1000 personnel), 5–10 people for medium-sized organisations (1000–5000 personnel) and 10–15 people for large organisations (>5000 personnel). Mailboxes were set up for 47 responding organisations. Mailboxes were monitored and reminders were sent until the response threshold was reached or three reminders had been sent.
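The distribution rule and the reminder rule described above can be written as a small sketch. The size bands and the limit of three reminders come from the procedure. The function names and the `threshold` parameter are hypothetical, because the value of the response threshold is not stated here.

```python
from typing import Tuple

MAX_REMINDERS = 3  # reminders stopped after three, as described in the procedure


def recipients_range(personnel: int) -> Tuple[int, int]:
    """Return the (minimum, maximum) number of survey recipients requested."""
    if personnel < 0:
        raise ValueError("personnel must be non-negative")
    if personnel < 1000:       # small organisation
        return (5, 5)
    if personnel <= 5000:      # medium organisation
        return (5, 10)
    return (10, 15)            # large organisation


def should_send_reminder(responses: int, threshold: int, reminders_sent: int) -> bool:
    """Send another reminder only while below the threshold and under the cap.

    `threshold` is an assumed parameter; its value is not given in the text.
    """
    return responses < threshold and reminders_sent < MAX_REMINDERS


print(recipients_range(800), recipients_range(3200), recipients_range(12000))
```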

Participants

A total of 190 participants from 29 organisations across all states and territories completed the 2018 survey. Table 2 shows the demographic details of respondents. To compile the demographic data, a free-text question asked ‘What is your role?’ and 122 of the answers could be coded to a role type. The median number of years’ experience participants had in emergency services was 19 years and the median years of participant experience in an organisation or agency was 12 years. Table 3 shows the organisational types represented in the survey.

Table 2: Characteristics of participants in the survey sample.

| Participants by role type | Number | Percentage |

| Senior management (e.g. directors) | 11 | 6 |

| Middle management (e.g. district managers) | 70 | 37 |

| Frontline responsibilities (e.g. training instructors) | 41 | 22 |

| Answers not codifiable (e.g. ‘fire’, ‘operations’) | 38 | 20 |

| Not answered | 30 | 15 |

| Total | 190 | 100 |

Table 3: Characteristics of organisations represented in the survey sample.

| Participants by organisation type | Number | Percentage |

| Urban fire services | 15 | 8 |

| Rural fire services | 46 | 24 |

| Land Management | 37 | 20 |

| State Emergency Services (flood and storm) | 23 | 12 |

| Multiple-hazard agencies (e.g. departments of fire and emergency services) | 55 | 29 |

| Specialist agencies (e.g. water utilities, specialist sciences) | 14 | 7 |

Results

Of the 190 total responses, 142 participants provided comments in response to a question about strategies their organisation had in place to keep up to date with the latest research. Answers were coded to the four levels of research utilisation maturity developed in 2016. Notably, participants whose comments were coded at higher levels of research utilisation maturity also reported higher levels of organisational learning and greater agility in overcoming barriers to implementing changes. This was evident in both the 2016 and the 2018 surveys (see Owen, Bethune & Krusel 2018).

Collaboration with the KIRUN led to developing descriptors of research utilisation maturity. Table 4 is a summary of the descriptors for each of the levels of research utilisation maturity presented in Table 1. Descriptors relate to four elements identified as important to support successful implementation of research where a need for change was indicated. The four areas are:

- people and culture

- communities-of-practice

- support systems of governance

- resourcing.

Analysis of the data from the 2018 survey showed that when maturity to use research was low, based on the coded comments, use of research outputs was limited (e.g. products or outcomes ‘sit on the shelf’). Outputs could also be implemented in a fragmented way if tied to one-off projects. When organisational maturity to use research was high, research outputs were discussed and adapted, used in multiple applications and connected to organisational or operational practice.

A conceptual model to implement research findings

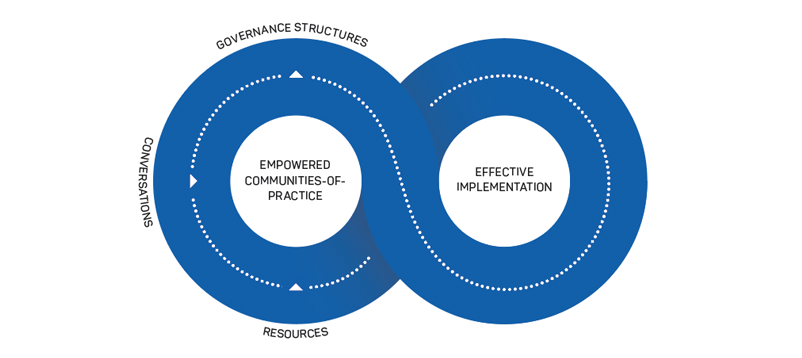

Figure 1 is a model to conceptualise how these elements may work together to support research utilisation that leads to changes in practice to support disaster risk reduction.

Governance structures and resources authorise and support conversations within communities-of-practice so they can adapt and transform findings in ways to fit context. This becomes effective implementation.

Conversations

In the 2018 survey, several items highlighted the importance of discussions as enablers of research utilisation. Table 5 shows these items and reports internal consistency reliability estimates for each subscale using Cronbach’s alpha (all above the commonly used threshold of 0.7). Table 6 shows the correlations between these items when combined as subscales.
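For readers unfamiliar with the reliability estimate, Cronbach’s alpha for a subscale of k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of respondents’ total scores. The sketch below shows the calculation with the Python standard library. The ratings in `example` are hypothetical and are not survey data, and the function is an illustration rather than the procedure used to produce Table 5.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])                      # number of items in the subscale
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    items = list(zip(*responses))              # transpose: one tuple per item
    item_variances = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - item_variances / total_variance)


# Hypothetical ratings (1 to 5) from six respondents on a four-item subscale.
example = [
    [4, 4, 5, 4],
    [2, 3, 2, 2],
    [5, 4, 5, 5],
    [3, 3, 3, 4],
    [1, 2, 1, 2],
    [4, 5, 4, 4],
]

print(round(cronbach_alpha(example), 3))
```

Values closer to 1 indicate that the items in a subscale vary together, which is the sense in which the subscales in Table 5 are described as internally consistent.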

Theoretically, while discussions might be the start of a process, not all discussions will succeed in implementation or utilisation of research, even if the research findings have merit. In conceptualising change in a workplace context, Radin Umar and colleagues (2018) claimed that successful change is dependent on the kind of discussions that occur. They suggest there are qualitative differences depending on whether discussions rely on Type 1 or Type 2 thinking (Kahneman 2011). Type 1 thinking is speedy and automatic, unquestioned and abstract. Type 2 thinking is slow and effortful. While reference to Kahneman (2011) may seem puzzling, the point is that emergency services practitioners are more likely to engage in quick and reactive thinking and dismiss an idea as irrelevant to them, leaving their assumptions and biases untested. The ways in which reactive thinking impedes learning have been demonstrated (Owen et al. 2018). If Type 1 thinking is occurring in discussions, they are likely subject to cognitive bias, in which individuals select information that reinforces their existing beliefs, leaving their previous assumptions unexamined.

In research examining the challenges of emergency services organisations working with communities, Cornes and colleagues (2019) found that information-giving based on a knowledge-deficit model pervaded the assumptions of practitioners about what is needed for community resilience. This finding is an example of a ‘basic’ level of maturity (see Table 1) when it comes to applying research.

Table 4: Indicators of maturity in research utilisation to support evidence-informed practice.

| Maturity level: collective capability in utilising research for implementation | | | | |

| Element | 1 = Basic | 2 = Developing | 3 = Established | 4 = Leading |

| People and culture | Individuals bring prior skills and find their own professional development. Small pockets of research utilisation value are contested. Limited sharing of knowledge and assumptions remain untested. | Research utilisation is formally acknowledged but is limited. Limited organisational understanding or support for using research or its implications for practice. | Inquiry related practices embedded in all or many job roles. A learning culture supports testing existing ways of working. Value of research utilisation is widely acknowledged but limited to ‘safe’ questions. | Open knowledge sharing and evidence used to improve, adopt, anticipate and question existing understanding and practice. |

| Communities-of-practice (communication and engagement) | Occurs through individuals who use their own resources and networks. | Some end users are engaged but activity is not linked to organisational processes. Communications are one-way. | Active and widespread engagement. | Proactive integration of research insights into multiple aspects of activity. |

| Support systems (resources) | Limited to individuals and their influence within the organisation. | A research policy or unit exists but is not connected to core business. | Technical systems in place to monitor, review and evaluate. | Support systems are resourced as part of core business. |

| Governance (policies, procedures, doctrine PPD structures and monitoring) | PPD locally organised. Research utilisation is undertaken by individuals as an add-on. Research utilisation is not part of core job. No systematic quality assurance, monitoring and reporting on research utilisation. | PPD exists but with limited connection to core business. Reactive structures are put in place when a problem emerges. Project-based governance. Some processes exist but are largely spasmodic and unconnected. | PPD codified, clearly visible and accessible. Research utilisation is strategic, planned and systematic. Research utilisation is monitored and reporting is reasonably established within governance structures. | PPD embedded with loops to core business. Structures support risk taking and innovation. Research utilisation is monitored and reporting is well established. Governance allows for ‘safe fails’ and transformational change. |

| Implementation of research findings and research output products (e.g. tools, aides-memoire) | Research products sit on the shelf. Some individuals ‘know’ and use the products but information disappears when people leave. | Products are one-off and tied to a specific project. Experience of use is often short-lived and organisational memory of utilisation is partial. Utilisation is not sustained (i.e. does not get built into business-as-usual). | Products are user-friendly, fit-for-purpose, easily accessible, widely known and actively incorporated into business-as-usual. Products are widely disseminated and resourced and may have a cost-benefit assessment (link to systems). Products are likely used in multiple applications. | There is active testing and prototyping of products emerging from research outputs. Widespread knowledge and use of products. Products may be tested and transformed and there is application beyond the organisation. |

Cornes and co-authors (2019) proposed that emergency services personnel need to better understand human rationality and why people think the way they do. This would assist in moving to a higher level of research utilisation maturity. In doing so, facilitators of discussions can assist if they create the conditions for Type 2 thinking (Kahneman 2011). This requires a slowing down of default thinking processes to one that is deliberate, effortful, logical and conscious. Radin Umar and colleagues (2018) suggest this may assist practitioner perceptions and attitudes and, ultimately, the acceptance and adoption of new ideas. This can be modified during the sense-making iteration process.

If discussions support slow thinking to cycle through iterations of processing information and meaning-making, practitioners are more likely to arrive at a deliberate conclusion rather than a default, reactive approach, which has been identified as impeding practitioner learning (Owen et al. 2018). In addition, face-to-face discussions provide a richer environment where participants can detect body language or other visual cues and use this to process meaning or disagreement. It is also important that facilitators of discussions about research findings be mindful of who is part of the conversation and who is not. Inequality and aspects of power need consideration if discussions are to be inclusive. Inclusive conversations are more likely to empower communities-of-practice through greater awareness of collective efficacy.

Figure 1: Conceptual model of implementing change from research knowledge.

Empowered communities-of-practice

Taylor, Ryan and Johnston (2020) examined how community engagement can be evaluated. They noted that ‘conversations with members of the public were valuable tools to determine the overall success of community engagement programs’ (p.49). The authors concluded that a community-of-practice approach enhances community engagement evaluation. This is consistent with findings in this study, where high agreement with indicators of enabled communities-of-practice (Table 5) was associated with higher indicators of research implementation (Table 6).

Drawing on learning theory (Argyris & Schon 1974), communities-of-practice are empowered when they are able to move through three stages of learning to reflect on their practice and how new knowledge may be applicable. Argyris & Schon (1974) identified three levels of learning. Third-order learning occurs when stakeholders critically reflect on their learning and generate new modes of acting.

Governance and resources

The capability to mobilise resources and orchestrate actions is an important determinant of effective implementation (Weiner 2009). Research undertaken to develop the research utilisation assessment tool found associations between how survey respondents reported their agreement with indicators of governance and resourcing (see Tables 5 and 6).

Conceptually, governance and resources are determinants of implementation in that they authorise and make visible the work that is undertaken. When there are governance processes in place, activities associated with research utilisation are codified, linked to the business and monitored. Without these processes, research utilisation relies on passionate individuals whose actions are lost from corporate memory once those individuals leave. However, implementation does not rely solely on whether these organisational systems are present. When collective efficacy is weak, implementation is likely to be resisted, regardless of the governance processes or resources available. If changes arising from research findings are enacted under these conditions, they are likely to demonstrate compliance rather than commitment. When commitment and collective efficacy are high, resources will be used skilfully and efforts may exceed those listed in job functions.

Table 5: Survey indicators used to develop the conceptual model of research implementation.

| Indicators | Items included in the survey |

| Conversations (n=4, α=0.851) | There are frequent discussions of the implications of research knowledge. Conversations about evidence-based practice informs decision-making. The organisation culture values research and its use. There is active and widespread engagement in utilisation and learning activities. |

| Communities-of-practice (n=4, α=0.863) | People transform research products to suit multiple applications. Testing research findings includes processes that trial new practices and allows for ‘safe fails’. There is active participation in testing and prototyping research products to make them suitable for the context. Research is about solving problems and ‘problem seeking’ to proactively explore and develop solutions. |

| Governance (n=3, α=0.809) | Responsibility for using research is formally embedded in job roles. There are structures (e.g. research committees) that review and monitor research utilisation. Reporting processes are well established. |

| Resources (n=3, α=0.879) | Resources are available to drive change based on research and to make changes part of core business. There are resources available to implement changes needed to use research based on findings. Resources are in place for individuals to participate in professional development events. |

| Implementation (n=4, α=0.853) | Research products are incorporated into business-as-usual. Research products are embedded into training, guidelines or doctrine. The agency is able to implement changes that may be needed. The agency is able to assess and evaluate the impact on practice of the research. |

Table 6: Correlations between items included in Table 5 as subscales.

| Pearson correlations | Conversations (n=116) | Communities-of-practice (n=96) | Governance (n=96) | Resources (n=103) | Implementation (n=100) |

| Conversations | 1 | 0.749** | 0.660** | 0.786** | 0.631** |

| Communities-of-practice | | 1 | 0.632** | 0.693** | 0.607** |

| Governance | | | 1 | 0.590** | 0.524** |

| Resources | | | | 1 | 0.691** |

| Implementation | | | | | 1 |

** Correlation is significant at the 0.01 level (2-tailed).
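The following sketch illustrates how correlations between subscales of this kind can be computed. It rests on assumptions that are not stated in this paper: that each subscale score is the mean of its item ratings, that a respondent with a missing item has no score on that subscale, and that each correlation uses only respondents with scores on both subscales. The function names and ratings are hypothetical, and significance testing is omitted.

```python
from math import sqrt


def subscale_score(item_ratings):
    """Mean of a respondent's item ratings, or None if any item is missing."""
    if any(r is None for r in item_ratings):
        return None
    return sum(item_ratings) / len(item_ratings)


def pearson_r(x, y):
    """Return (r, n) using only respondents with both values present."""
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    n = len(pairs)
    if n < 2:
        raise ValueError("need at least two complete pairs")
    mean_x = sum(a for a, _ in pairs) / n
    mean_y = sum(b for _, b in pairs) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in pairs)
    var_x = sum((a - mean_x) ** 2 for a, _ in pairs)
    var_y = sum((b - mean_y) ** 2 for _, b in pairs)
    return cov / sqrt(var_x * var_y), n


# Hypothetical item ratings for five respondents; None marks a skipped item.
conversations_items = [[4, 4, 5, 4], [2, 3, 2, 2], [5, 4, 5, 5], [3, None, 3, 4], [1, 2, 1, 2]]
implementation_items = [[4, 4, 4, 3], [2, 2, 3, 3], [5, 5, 4, 5], [3, 3, 3, 3], [2, 1, 2, None]]

conversations = [subscale_score(row) for row in conversations_items]
implementation = [subscale_score(row) for row in implementation_items]

r, n = pearson_r(conversations, implementation)
print(f"r = {r:.3f} (n = {n})")
```

Under these assumptions the number of respondents contributing to each correlation can differ between subscales, which is one possible reason for differing n values of the kind shown in the column headings of Table 6.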

Conclusions and limitations

This paper discussed previous empirical work as well as co-construction work with the KIRUN to develop a research utilisation maturity matrix. A self-assessment tool, based on the matrix, allows practitioners to diagnose the stage of their organisation in terms of organisational capacity to utilise research. To address the research question, a conceptual model was proposed to illustrate how organisational elements work together to accelerate implementation of research outputs. Gaps between research and practice remain, and closing those that bear on disaster risk reduction is the most urgent.

Figure 1 suggests that a critical and often overlooked component of research implementation is the collective beliefs of end users. For this study, the focus was on the perceptions of emergency services practitioners and the findings of research relevant to them. Figure 1 suggests that enabling critically reflective discussions that unpack collective beliefs and test assumptions is an important step in implementing research. This may provide insights for changes in emergency services practice.

The findings here provide ways that emergency services personnel can assess their organisation’s practices related to research utilisation. They can also use the maturity matrix to identify steps needed to move along the path towards research implementation. This study supports the work of others (e.g. Radin Umar et al. 2018) in finding that conversations are an important starting point. Implementation of research is not content-specific but is context-specific. As others have found (e.g. Taylor, Ryan & Johnston 2020), a staged approach is needed.

This research has limitations. At present, this conceptual model has been empirically derived and needs further testing. Associations exist between key indicators but these do not establish causation. The assessment tool explained in this paper has been adapted for the needs of disaster risk reduction researchers but the content is preliminary and speculative. More needs to be understood about how stakeholders successfully implement research. This would help to identify the enablers and barriers that exist to ensure effective use of research and to accelerate courses of action.