Abstract

In emergency management organisations, the drive to use research to inform practice has been growing for some time. This paper discusses findings from a survey used to investigate the perceived effectiveness of a number of important processes in research utilisation. In 2016, a survey was completed by 266 respondents in 29 fire and emergency services agencies. Questions sought answers on perceived effectiveness in disseminating research within agencies, assessing and evaluating the impacts of the research on agency practice, implementing agency changes that may be needed, establishing monitoring processes to track changes and communicating outcomes of changes made as a result of research. The study found that there were differences in levels of perceived effectiveness between those in senior management and front-line service positions. The differences suggest that front-line services personnel have lower levels of perceived effectiveness in how research is disseminated. The study also found agencies had different approaches to keeping up-to-date with research advances. An examination of the activities identified four developmental levels of research utilisation maturity. The findings suggest more work is needed to better understand the enablers and constraints to utilising research to support development of evidence-informed practice.

Introduction

Research utilisation is critical not just for organisational growth, competitiveness and sustainability (Standing et al. 2016) but also for wide-scale sector development, community and economic wellbeing (Cutler 2008, Ratten, Ferreira & Fernandes 2017). In many countries collaboration and innovation are supported by government policies and initiatives that fund cooperative research centres to take a collaborative approach to research and development. These research centres produce ideas and outputs that can be adopted by organisations and used. However, research examining how research outcomes lead to innovation, including enablers and constraints, appears limited to the medical field in general (Elliott & Popay 2000, Kothari, Birch & Charles 2005) and nursing in particular (Brown et al. 2010, Carrion, Woods & Norman 2004, Retsas 2000).

This paper considers this gap for the fire and emergency services sector and investigates the approaches to using research outputs to inform work practice. The emergency services sector gains insights from research undertaken through a range of sources such as direct commission and academic institutions, as well as through bodies such as the Australasian Fire and Emergency Service Authorities Council (AFAC) and the Bushfire and Natural Hazards CRC (CRC).

Emergency services organisations currently grapple with complex and ‘wicked’ problems (Bosomworth, Owen & Curnin 2017). When engaging with cooperative research centres, agencies typically ensure that the research being undertaken is aligned to their needs. Over the past decade there has been increasing scrutiny on these organisations to justify actions (e.g. Eburn & Dovers 2015, Boin & t’Hart 2010). There is an urgent need for these organisations to develop their evidence-informed practice. One way is to actively use research outcomes from their partnerships with cooperative research centres.

Literature review

The value of utilising research is well established (e.g. Brown & Frame 2016, Cutler 2008, Dearing 2009, Janssen 2003). When research utilisation is done well it enables:

- the pace of adoption processes to be accelerated (Hemsley-Brown 2004, Marcati, Guido & Peluso 2008)

- the number of adoptions possible from conducted research to be increased (Dearing 2009, Retsas 2000)

- the quality of research implementation to be enhanced (Janssen 2003, Kothari, Birch & Charles 2005)

- worthy innovations to be used (Glasgow, Lichtenstein & Marcus 2003, Standing et al. 2016)

- the research effectiveness at agency and sector levels to be demonstrated (Elliott & Popay 2000).

Research is only one of several ingredients for successful innovation and, in many respects, only the start of the process. Utilisation from research does not magically follow from research outputs. What is needed is a systematic follow through from research insights to consider the implications and to develop processes that support review and, where needed, implementation and change.

Studies of utilisation and the barriers that need to be overcome (e.g. Funk et al. 1991, Cummings et al. 2007, Brown et al. 2010) suggest that research is used through a process by which new information or new ideas are communicated through certain channels, over time and among members of a social system. The process includes:

- disseminating new ideas or findings among members of a social system (Hemsley-Brown 2004, Brown & Frame 2016)

- assessing and evaluating the ideas in terms of their relevance to members of the social system (Carrion, Woods & Norman 2004, Dearing 2009)

- implementing changes that may be needed (Brown et al. 2010, Elliott & Popay 2000)

- monitoring the effects of the changes put in place (Cummings et al. 2007, Cutler 2008)

- reporting outcomes of changes made as a result of the new idea (Glasgow, Lichtenstein & Marcus 2003, Standing et al. 2016).

Research utilisation occurs through social interaction and the development of shared understanding as well as organisational processes to embed new ideas into work practice.

This brief review shows that a better understanding of the processes to utilise research is important, especially if emergency services organisations are to maximise investment and engagement with cooperative research centres.

Method

A survey was distributed in 2016 to heads of emergency services agencies seeking a stratified sample of personnel within the agency. This included those working at:

- senior management levels including the most senior person in the organisation responsible for communications, training and development, operations, community safety, knowledge management, innovation and research

- middle management levels including regional, operational and non-operational personnel

- operational or front-line service positions (e.g. field operations personnel, community education officers and training instructors).

In the survey ‘research’ was defined as a systematic approach to answering a question or testing a hypothesis using a methodological study. The researcher enquires into a problem, systematically collects data and analyses these to develop findings to advance knowledge. Doing research in this way is distinguished from gathering general information by reading a book or surfing the internet. ‘Research utilisation’ was defined as the process of synthesising, disseminating and using research-generated knowledge to make an impact on or change the existing practice. Respondents were asked to consider research that may have come from a source internal to their organisation (conducting its own research) and from an external source, such as cooperative research centres and other research institutions.

In the 2016 sample, 50 agencies were invited and 266 responses were received from 29 organisations. The agency participation rate (58 per cent) is appropriate for online surveys of this type (Baruch & Holtom 2008). The median number of years that survey respondents had been in the sector was 22 and the median number of years within the agency was 13, demonstrating the level of experience in emergency services of those responding.

There was a reasonable spread of participation from the kinds of agencies included in the sector, with the exception of urban agencies, where only one agency participated, yielding 12 responses (five per cent). The largest share of responses came from people in agencies that have multiple hazard roles (n=77 or 35 per cent). This indicates the structural shifts occurring within the sector as well as a broadening of the stakeholder base. Rural agencies were well represented (n=52 or 21 per cent), as were land management agencies (n=37 or 15 per cent), State Emergency Services (n=35 or 14 per cent) and agencies with other roles such as critical infrastructure, humanitarian and specialist science roles (n=38 or 15 per cent).

Of the respondents who answered the question about their position in the agency, 29 (15 per cent) were in senior management positions (e.g. directors), 128 (66 per cent) were in middle management roles (e.g. district managers) and 37 (19 per cent) had front-line responsibilities (e.g. training instructors).

The survey consisted of a number of quantitative Likert-type questions where respondents were asked to rate their level of agreement on a scale of 1 to 7, with an option for ‘can’t answer’. In addition there were qualitative questions inviting comments. One in particular is discussed in detail here. The qualitative responses to the question ‘What strategies does your agency have in place to keep up-to-date with research?’ yielded comments from 168 respondents. These were initially coded and discussed between two coders. A sample of 30 comments was coded to develop a framework to discuss. Once the coders achieved an inter-rater reliability of 88 per cent the rest of the comments were coded to four identified themes.
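The coding check described above can be illustrated with a short calculation. The sketch below assumes the reliability figure is simple percent agreement between the two coders, which the survey description does not specify; the function name and the example codes are hypothetical and are not survey data.

```python
def percent_agreement(coder_a, coder_b):
    """Share of items to which both coders assigned the same code."""
    if len(coder_a) != len(coder_b):
        raise ValueError("both coders must rate the same items")
    if not coder_a:
        raise ValueError("at least one rated item is required")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


# Hypothetical maturity codes (1 to 4) assigned by two coders to eight comments.
coder_a = [1, 2, 2, 3, 4, 1, 2, 3]
coder_b = [1, 2, 3, 3, 4, 1, 2, 3]
print(f"Agreement: {percent_agreement(coder_a, coder_b):.1%}")
```

Percent agreement does not correct for agreement expected by chance, so a chance-corrected statistic may be preferable where codes are unevenly distributed.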

Limitations

While there are processes in place to ensure that the research being undertaken is addressing a gap in knowledge, what has not been discussed is if that research is the best available to advance societal goals (Sarewitz & Pielke 2007). The focus in this paper has assumed that those processes are already in place between agencies and their research suppliers.

The qualitative framework of research maturity (discussed below) is based only on what the participant had recorded, meaning that a respondent’s agency may be more active but this was not articulated in the comment.

Results

Perceived effectiveness of research utilisation processes

Respondents were asked to rate the perceived effectiveness of their agency in terms of its processes to:

- disseminate research within the agency

- assess and evaluate the impact on agency practice of the research

- implement any agency changes that are needed

- establish monitoring processes to track changes

- disseminate the outcomes of changes made as a result of research.

Differences were found in the ways respondents rated their levels of satisfaction on these items. On average respondents rated their agency’s effectiveness in ‘Assessing and evaluating the impact of research in agency practice’ significantly lower than they did its effectiveness in disseminating Bushfire CRC research.¹ In addition ‘Putting in place processes to monitor and track changes’ was also significantly lower.² This indicates that while there are higher perceptions of effectiveness with the ways in which personnel receive information about the research, there is less satisfaction with effectiveness in considering the implications or implementation.

Given the sustained effort that the CRC and AFAC have put into packaging materials to make dissemination a relatively straightforward and accessible process for agencies, this may indicate that similar resources and tools are required to help agencies undertake other aspects important in the utilisation process.
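The comparisons above are reported as paired t-tests, in which each respondent's rating of one process is compared with the same respondent's rating of another. A minimal sketch of that calculation follows, using only the Python standard library; the function name and the ratings are hypothetical illustrations, not survey data.

```python
import math
import statistics


def paired_t(first, second):
    """Return (t, df) for a paired-samples t-test on two equal-length lists."""
    if len(first) != len(second):
        raise ValueError("ratings must be paired")
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two pairs")
    # Standard error of the mean difference, using the sample standard deviation.
    se = statistics.stdev(diffs) / math.sqrt(n)
    if se == 0:
        raise ValueError("all differences are identical; t is undefined")
    return statistics.mean(diffs) / se, n - 1


# Hypothetical 1-7 ratings from five respondents for two processes.
dissemination = [4, 5, 3, 6, 4]
assessment = [3, 4, 3, 4, 3]
t_value, df = paired_t(dissemination, assessment)
print(f"t({df}) = {t_value:.3f}")
```

A positive t here means the first process was rated higher on average than the second by the same respondents.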

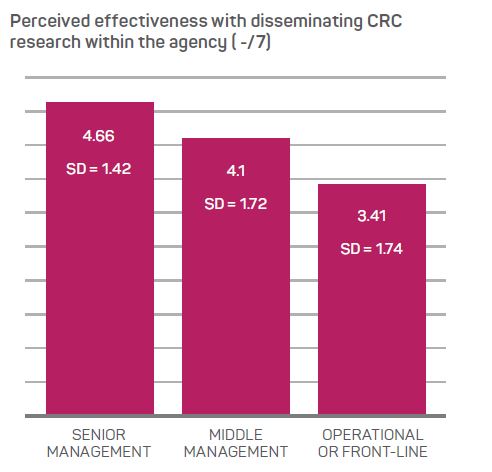

What is interesting is that while levels of perceived effectiveness with disseminating research were high overall, there were differences based on the hierarchical role the respondent had in the organisation. Figure 1 shows the averages and standard deviations for senior managers, middle managers and those working on the front-line. There was a significant difference between senior managers and front-line personnel.³ The difference suggests that front-line services personnel have lower levels of perceived effectiveness in how research is disseminated. This has implications for their roles as these personnel are expected to translate research outcomes into practice (e.g. training and community outreach programs).

Figure 1: Mean differences in perceived effectiveness of disseminating research within the agency for senior management, middle management and front-line services personnel.
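The comparison across senior management, middle management and front-line roles is reported as a one-way ANOVA. As a rough sketch of how such an F ratio is formed, the function below divides the between-group mean square by the within-group mean square; the function name and the ratings are hypothetical and do not reproduce the survey data.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of rating lists."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of individual ratings around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = k - 1
    df_within = n_total - k
    f_ratio = (ss_between / df_between) / (ss_within / df_within)
    return f_ratio, df_between, df_within


# Hypothetical 1-7 ratings for three role groups.
senior = [5, 6, 5, 4]
middle = [4, 5, 4, 3]
front_line = [3, 4, 3, 2]
f_ratio, df_b, df_w = one_way_anova([senior, middle, front_line])
print(f"F({df_b}, {df_w}) = {f_ratio:.3f}")
```

A significant F only indicates that at least one group mean differs; identifying which pair differs, as in the senior versus front-line contrast, needs a follow-up comparison.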

Keeping up-to-date with research

Respondents were asked to provide comments on the ways they knew of to keep up-to-date with research. This is a first step to then being able to consider implications for agency practice and whether or not anything needs to change. There were 168 respondents who provided comments in the 2016 survey. These included comments in relation to participating in CRC or AFAC events, such as attending a conference or Research Advisory Forum as well as participating in the research project team as an end-user.

Table 1: Research utilisation maturity codes and examples.

| Level | Description | Examples in data to the question ‘(if yes) what strategies does your agency have in place to keep up-to-date with research?’ |
| --- | --- | --- |
| 1. Low (Basic) N=39 (24%) | Systems are ad hoc and unsystematic. Attempts to keep up-to-date with research depend on individual effort. | ‘Undefined, not clearly communicated within communications. Nil business unit assigned to research and development.’ ‘…the onus for keeping up-to-date is largely upon individuals maintaining an interest, or subscribing to emails.’ |
| 2. Moderate (Developing) N=63 (39%) | Some systems and processes are documented, which enables research to be disseminated. There is little or no evidence of analysis or impact assessment. | ‘We have two people that email CRC updates to staff.’ ‘Lots of material is distributed via our portal and email to keep staff and volunteers informed.’ |
| 3. Intermediate | There are established processes in place for reviewing research (e.g. dissemination and review either through job responsibilities or an internal research committee). No evidence of how the findings are translated or connected to operational activities. | ‘Developed a research committee.’ ‘SMEs appointed as capability custodians to ensure up-to-date best practice.’ |
| 4. High (Leading) N=23 (14%) | There is evidence of active connections between research and operational activities. Operational and strategic decisions are informed by assessing research using formal research utilisation processes. These processes and systems are widely understood and embedded in multiple areas of practice. | ‘… a process of ensuring results are read by key specialist staff involved in program design and delivery, are interpreted and analysed for their implications and relevance and then used to inform decision-making and strategy through numerous internal fora.’ ‘Alignment of evidence-based decision-making in the planning phases of annual planning and the development of indicators around causal factors that inform emergent risk.’ |

Other ways included keeping abreast of the research from emails or other forms of information dissemination. An analysis of the comments shows that some agencies have formalised processes in place to discuss and review research while other agencies leave this up to individual personnel (Table 1).

Given the importance of the methods agencies use to keep up-to-date with research these comments were further analysed. Four themes emerged that could be identified as developmental in terms of a new variable labelled research utilisation maturity. Examples of these developmental levels are presented in Table 1.

The comments were given a ranking of research utilisation maturity indicated in Table 1. The variable (research utilisation maturity) was added to the database and used to further analyse and compare quantitative responses.

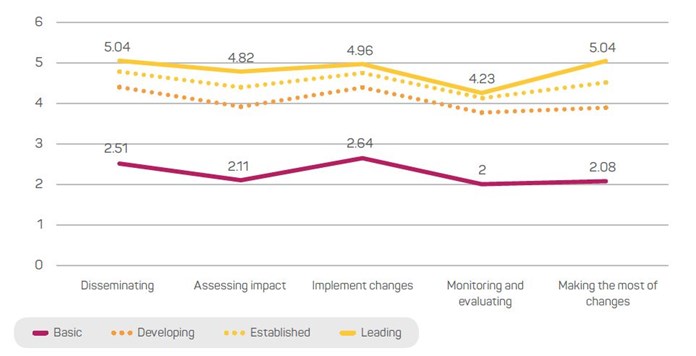

Figure 2 shows the mean scores for each of the coded research utilisation maturity groups. Those coded to the high research utilisation maturity group rated significantly higher levels of perceived effectiveness on all processes associated with learning from research outputs compared to the lower ranked group. Responses on the utilisation maturity framework yielded statistically significant results for perceptions of effectiveness in disseminating research within the agency,⁴ assessing and evaluating the impact on agency practice of research,⁵ implementing any changes that may be needed,⁶ putting in place monitoring processes to track changes⁷ and communicating outcomes of changes made as a result of research.⁸ Respondents were asked to rate the degree to which they thought their agency was one that exemplified a learning organisation. This was defined as an organisation that learns from experience of its members or learns from the experience of others.⁸

Respondents reporting strategies that were coded to the higher level of research utilisation maturity rated their organisations significantly higher as learning organisations than those coded to lower levels of research utilisation maturity.⁹
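The effect sizes printed with the ANOVA results in the footnotes can be related to the reported F ratios. The sketch below assumes the standard identity for eta squared, η² = (F × df between) / (F × df between + df within); the source does not state which formula was used, and the function name is hypothetical. Applied to the F ratios and degrees of freedom in the footnotes, it returns values that agree to three decimal places with the effect sizes printed there (labelled ω in the footnotes).

```python
def eta_squared_from_f(f_value, df_between, df_within):
    """Recover eta squared (SS_between / SS_total) from a reported F ratio."""
    explained = f_value * df_between
    return explained / (explained + df_within)


# (F, df_between, df_within) for the ANOVA results as printed in the footnotes.
reported = [
    (4.356, 2, 186),
    (24.987, 3, 155),
    (28.614, 3, 147),
    (25.762, 3, 146),
    (20.360, 3, 143),
    (31.516, 3, 151),
    (14.5072, 3, 147),
]
for f_value, df_b, df_w in reported:
    eta_sq = eta_squared_from_f(f_value, df_b, df_w)
    print(f"F({df_b}, {df_w}) = {f_value}: eta squared = {eta_sq:.3f}")
```

On this reading, membership of a maturity group accounts for roughly 30 to 39 per cent of the variance in the five process ratings, compared with under five per cent for hierarchical role.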

These findings suggest that the approaches discussed by those in the higher research utilisation maturity group may provide insights for others. Leading agencies were ones that had:

- Established governance processes. They have established governance processes where business goals include research review (e.g. having a research review committee and a research framework as part of the business strategy). They also have active connections between research engagement and operations.

- Utilisation embedded into job roles. People have responsibilities for learning and review built into their job roles and into their group work. There is a widespread expectation that all personnel are responsible for learning and innovation and will adopt evidence-informed processes. This is supported by access to professional development opportunities.

- Active testing of outputs. They actively engage in testing outputs rather than accepting off-the-shelf products. They consult widely and know where to go for help and can access networks of expertise (internal or external to the agency) when needed.

- Communities of practice. They are actively engaged in agency and sector communities-of-practice (including other industries such as health) to communicate and innovate. They recognise that there are no magic solutions and they are able to articulate what is not known, problematic or uncertain that needs investigation. They recognise that learning is a process of continuous improvement.

Discussion

The differences reported between agency hierarchical roles suggest communication between senior management, middle management and front-line service roles needs attention. While it is reasonable to conclude that the onus of decision-making to determine if a change in practice is warranted will remain with senior personnel, if those in front-line positions are not as familiar with research outputs, it will be difficult for them to bring the required changes into practice. A focus on dissemination of research outputs to those responsible for front-line service delivery may be helpful.

In addition, agencies reporting higher levels of research utilisation maturity provide insights for others. It is important to recognise that change and innovation are developmental and require adjustments to governance processes, job responsibilities and participation in communities-of-practice. These findings indicate that it may be possible to develop an adapted scale of organisational maturity to assess and measure research utilisation. Further research would identify agency profiles of maturity in research utilisation so that appropriate supports can be facilitated.

These findings suggest that more attention on how organisations learn to utilise research is required. Given the significant scrutiny placed on organisations and the emergency services sector as well as the pressure to demonstrate an evidence-base to practice, having a strong approach to research utilisation would seem essential. The study also suggests some other implications for future consideration:

- Who is doing the utilisation and for whom? Are the same utilisation processes used for all research outputs or are different approaches needed, depending on the outputs? Is there a double edge to drawing on the perceptions of the ‘thought leaders’ who have been working in the agency for 20+ years, given that they are likely to be enculturated into established ways of seeing the world?

- What is being utilised from research? Are some research outputs easier (or acceptable) to utilise than others? Are there insights and outputs from research that are not utilised and why is this the case?

- Why are some barriers to utilisation more impervious to change? Are there research problems where proposed utilisation of insights or outputs is stifled?

Figure 2: Perceptions of effectiveness for four identified levels of agency research utilisation maturity.

These findings suggest that future research needs to tease out the elements that comprise learning and innovation cultures and the skills, processes and structures that are needed. Further work is needed to better understand how perceived barriers can be overcome in order to increase and strengthen cultures of learning within agencies and the sector. Doing so will support goals of agility and innovation within the sector through research utilisation, which include accelerating adoption, maximising the value of research and increasing the worthiness of innovation.

It is vital that agencies, and the sector, build capability in developing robust processes of deliberative review, assessment and evaluation so that evidence-informed practice can be demonstrated. This is necessary if the sector and involved agencies are to reap the full benefits of research.

Footnotes

1. Paired t-test: Disseminate the Bushfire CRC research within the agency (M = 3.97, SE = 0.109) and Assess and evaluate the impact of the research in agency practice (M = 3.57, SE = 0.104), t(239) = 5.955, p < .0005, r = .81

2. Paired t-test: Disseminate the Bushfire CRC research within the agency (M = 3.99, SE = 0.108) and Put in place monitoring processes to track changes (M = 3.44, SE = 0.106), t(233) = 6.208, p < .0005, r = .66

3. ANOVA: F(2, 186) = 4.356, p < .014, ω = .045

4. ANOVA: F(3, 155) = 24.987, p < .0005, ω = .326

5. ANOVA: F(3, 147) = 28.614, p < .0005, ω = .369

6. ANOVA: F(3, 146) = 25.762, p < .0005, ω = .346

7. ANOVA: F(3, 143) = 20.360, p < .0005, ω = .299

8. ANOVA: F(3, 151) = 31.516, p < .0005, ω = .385

9. ANOVA: F(3, 147) = 14.5072, p < .0005, ω = .228